Trustworthy AI

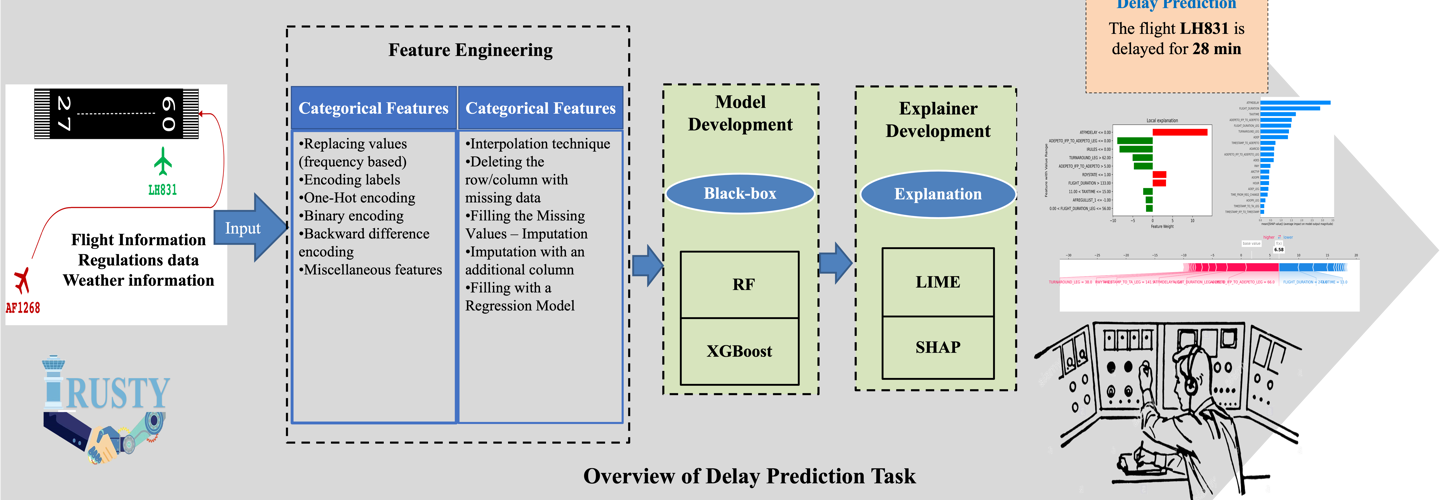


IVA's 100 List 2023

Explainable AI (XAI) addresses a central trust problem: AI systems are often treated as black boxes that offer no insight into how a decision was made, which factors drove it, or which data samples were responsible. The EU Ethics Guidelines for Trustworthy AI treat explainability as a key component of trustworthy AI. Current research therefore aims to make AI systems more transparent and explainable by providing clear accounts of how they operate, including their decision-making processes and data usage.

XAI helps build trust by letting users understand how an AI system reaches its decisions and how it uses data. Transparency also promotes accountability: it helps ensure that AI systems are designed and deployed responsibly, with appropriate oversight and governance mechanisms in place. Clear explanations of how decisions are made keep stakeholders informed, and they help developers identify and fix problems, so that systems can be debugged and improved over time.